2025

From Parameters to Prompts: Understanding and Mitigating the Factuality Gap between Fine-Tuned LLMs

Xuan Gong, Hanbo Huang, Shiyu Liang# (# corresponding author)

Preprint, under review, 2025

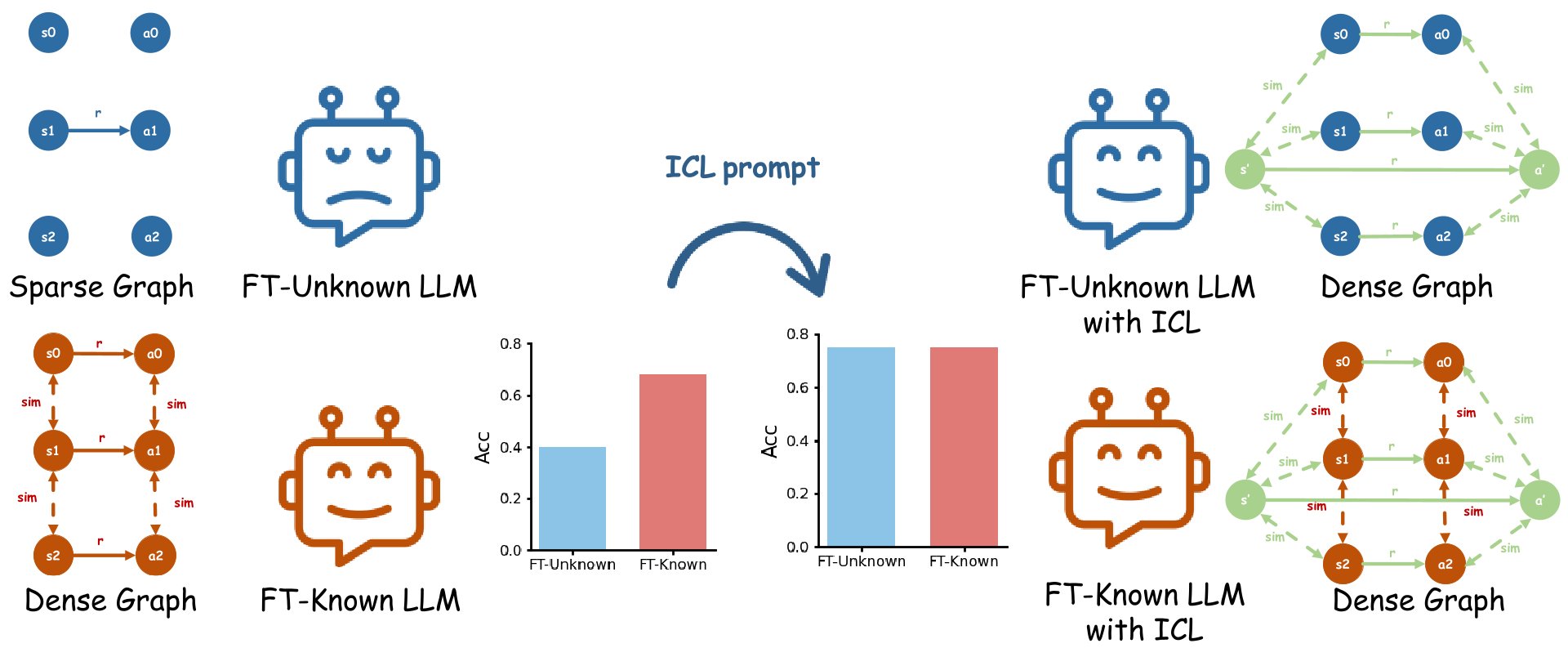

We revisit how supervised fine-tuning affects factual knowledge in LLMs, revealing a factuality gap between models fine-tuned on known versus unknown knowledge. This gap can be mitigated at inference time through in-context learning (ICL) or out-of-distribution prompts. Our theoretical and empirical results show that test-time prompts can overshadow the effect of the fine-tuning data, suggesting that ICL can compensate for poor fine-tuning and should be taken into account when evaluating fine-tuning strategies.

2024

DAMRO: Dive into the Attention Mechanism of LVLM to Reduce Object Hallucination

Xuan Gong, Tianshi Ming, Xinpeng Wang, Zhihua Wei# (# corresponding author)

EMNLP 2024 (Main Conference)

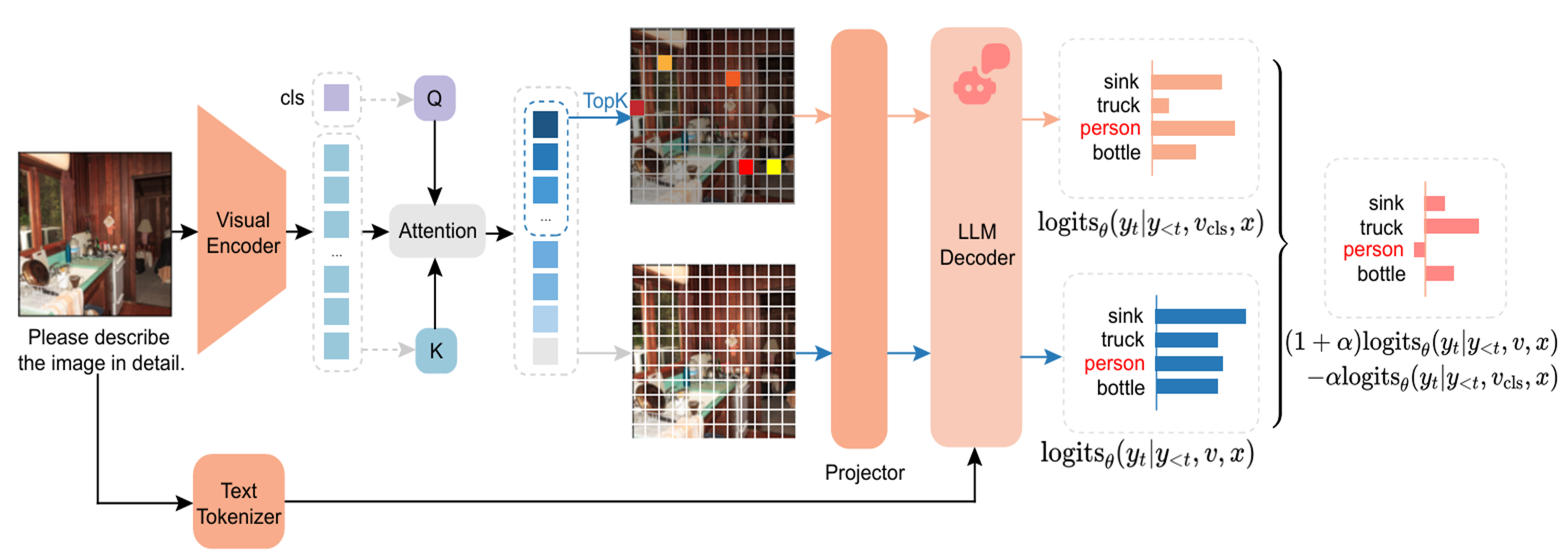

We propose DAMRO, a training-free method that reduces object hallucination in LVLMs by using the ViT CLS token to identify and filter out misleading background tokens that receive high attention. DAMRO significantly improves hallucination control in models such as LLaVA and InstructBLIP across multiple benchmarks.
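To make the token-selection idea above concrete, here is a minimal sketch in plain Python. It assumes we already have one attention weight per image patch token, taken from the ViT CLS token, and it treats the `top_k` most-attended patches as the "high-attention background tokens". The helper names `find_outlier_tokens` and `filter_tokens` are hypothetical, and simply dropping the selected tokens is only one illustrative reading of "filtering". The exact mechanism DAMRO uses to suppress their influence is not described here, so this is not the paper's implementation.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def find_outlier_tokens(cls_attention: Sequence[float], top_k: int) -> List[int]:
    """Hypothetical helper: return the indices (ascending) of the top_k patch
    tokens that receive the most attention from the ViT CLS token."""
    if top_k < 0:
        raise ValueError("top_k must be non-negative")
    # Rank patch indices by CLS attention, highest first (ties keep input order).
    ranked = sorted(range(len(cls_attention)),
                    key=lambda i: cls_attention[i], reverse=True)
    return sorted(ranked[:top_k])


def filter_tokens(patch_tokens: Sequence[T], outliers: Sequence[int]) -> List[T]:
    """Hypothetical helper: drop the outlier tokens, keeping the rest in order.
    Assumption: filtering means plain removal, purely for illustration."""
    drop = set(outliers)
    return [tok for i, tok in enumerate(patch_tokens) if i not in drop]


if __name__ == "__main__":
    attn = [0.05, 0.40, 0.10, 0.30, 0.15]  # toy CLS attention over 5 patches
    outliers = find_outlier_tokens(attn, top_k=2)
    print(outliers)  # [1, 3]
    print(filter_tokens(["p0", "p1", "p2", "p3", "p4"], outliers))  # ['p0', 'p2', 'p4']
```

In a real LVLM the attention values would come from the vision encoder's final layer and `top_k` would be a tuned hyperparameter. Both are assumptions of this sketch.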